Conjugate Gradient Method

The Conjugate Gradient (CG) method is a powerful iterative algorithm for solving large, sparse linear systems of the form , where is symmetric positive definite (SPD). Unlike general iterative methods such as Jacobi and Gauss-Seidel, CG is specifically designed for SPD matrices and has become fundamental in scientific computing and numerical simulation.

Formulation as Quadratic Optimization

The conjugate gradient method can be elegantly understood as an optimization algorithm for minimizing the quadratic function: where is SPD. The unique global minimizer of is precisely the solution to .

The classical steepest descent method updates along the negative gradient direction , but this approach can suffer from slow convergence, particularly when is ill-conditioned. The CG method overcomes this limitation by searching along a carefully chosen sequence of directions that are -conjugate (or -orthogonal), meaning for . This conjugacy property ensures that progress made along one direction is never undone by subsequent steps, and the minimization along each direction becomes independent of the others.

Line Search

Given a sequence of conjugate directions , the problem of minimizing the quadratic function reduces to finding optimal step sizes such that closely approximates the solution .

The most straightforward approach is greedy line search: starting from an initial point , we select a search direction and then minimize with respect to . For quadratic functions, this optimization has a simple closed-form solution that avoids matrix inversion: The intuition is clear: we start at , choose a direction , and move along this direction until the objective function is minimized. While this may not reach the global minimum in one step, it guarantees progress toward the optimal solution.

This procedure is then repeated iteratively: at the new point , we select the next direction , compute the corresponding step size , and continue.

The general iteration process can be summarized as: where are the search directions and is the residual at step . Note that the residual can be updated without recomputing from scratch: . In practice, when becomes sufficiently small (typically below a prescribed tolerance), the iteration process can be terminated early to achieve better computational efficiency.

Conjugate Directions

The choice of search directions is crucial for the method's performance. If the directions are poorly chosen, convergence will be slow. In particular, gradient descent (which uses the steepest descent directions) exhibits slow convergence for ill-conditioned matrices .

In contrast, if we choose the directions to be mutually -conjugate: the algorithm achieves remarkable efficiency: there is no "zigzagging" behavior, and we obtain the exact solution in at most steps, where is the dimension of the system:

Without loss of generality, assume and set to be the initial residual. Since , the gradient at is , so we set . The remaining directions will be constructed to be -conjugate to all previous directions.

Let denote the residual at the -th step: Note that equals the negative gradient of at , so standard gradient descent would move in the direction .

To construct conjugate directions, we require that each new search direction be built from the current residual while being -conjugate to all previous search directions. We start with the negative gradient and orthogonalize it w.r.t. against all previous search directions using the Gram–Schmidt process:

Algorithmic Simplification

The expressions above can be significantly simplified, leading to the elegant final form of the conjugate gradient algorithm. The key insight lies in proving two fundamental orthogonality relationships.

Orthogonality Properties. We first establish that the following orthogonality relations hold:

Proof Sketch. From the residual update formula (Equation (34.3.4)), we can write recursively: Taking the dot product with on both sides: By the -conjugacy property (Equation (34.3.3)) and the step size formula , we can verify that for .

The second orthogonality relation follows from the fact that each residual lies in the span of the search directions by construction, and these directions are built from previous residuals.

Simplified Formulas. Using these orthogonality properties, the conjugate direction formula (Equation (34.3.5)) simplifies dramatically. Since for , we have:

This allows us to simplify the step size calculation:

For the conjugate direction update, we can show that only the most recent direction matters. Using the residual update formula and the orthogonality properties:

Applying the orthogonality relations (Equations (34.3.7) and (34.3.8)), this simplifies to:

This remarkable simplification shows that we only need to retain the most recent search direction to compute the next one .

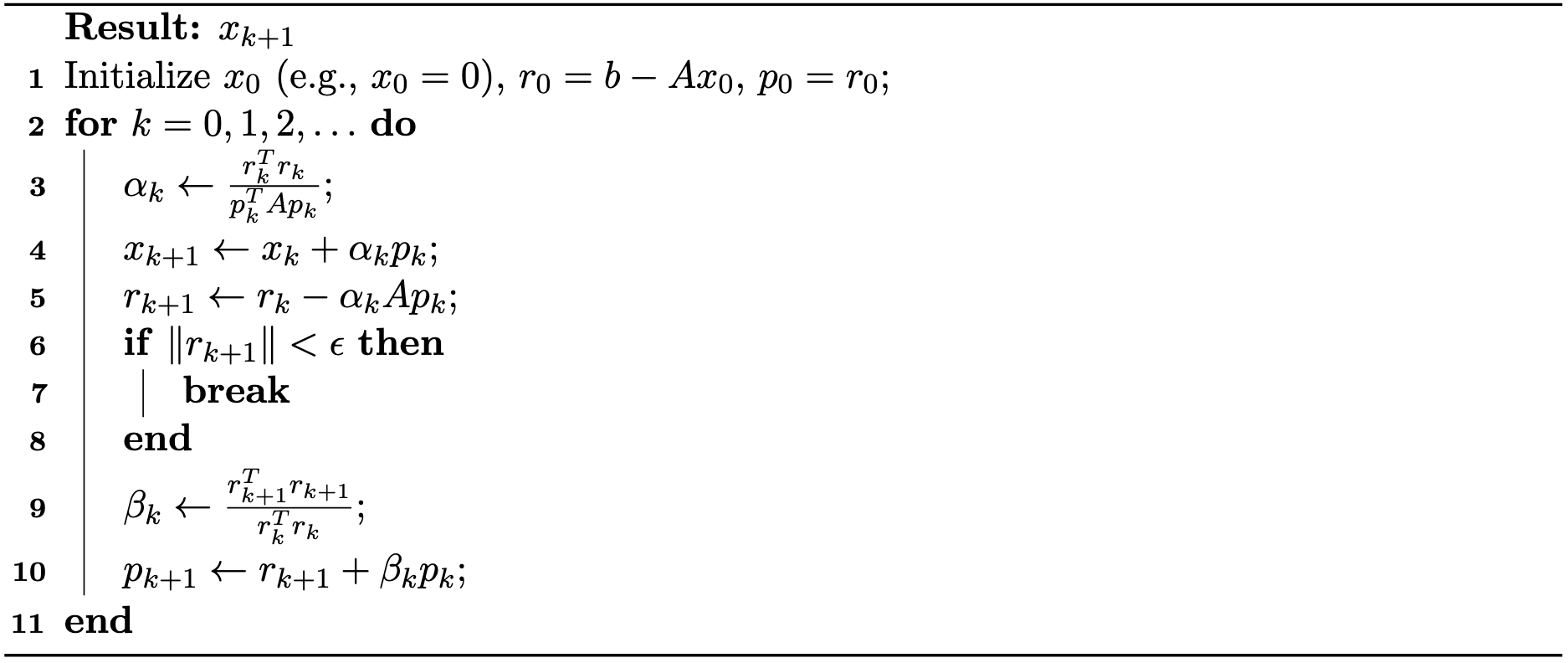

Final Algorithm. The simplified CG algorithm is summarized in Algorithm 34.3.1:

Preconditioning

The convergence rate of the conjugate gradient method is fundamentally determined by the condition number of matrix . When is ill-conditioned (i.e., has a large condition number), CG may converge slowly, requiring many iterations to reach acceptable accuracy.

Preconditioning is a crucial technique for accelerating convergence by transforming the original system into an equivalent one with more favorable spectral properties. The idea is to solve a modified system: where is a carefully chosen preconditioner matrix that approximates but is much easier to invert.

A simple yet effective choice is the diagonal (Jacobi) preconditioner, where contains only the diagonal entries of . The preconditioned CG algorithm then solves the transformed system while maintaining the essential structure of the original algorithm.

In practice, preconditioning amounts to scaling the residuals at each iteration by , which is computationally inexpensive when is diagonal. The preconditioned CG algorithm follows the same iterative structure as standard CG, but operates on the preconditioned residuals, often achieving significantly faster convergence for ill-conditioned problems.